I was preparing to push some changes a couple of days ago and as I usually do, I ran the tests. I sat back in my chair as the dots raced across the screen when suddenly I noticed that one of the dots linger. "OS is probably running some updates in the background or something" I said to myself, and ran the tests again just to be sure. I watched closely as the dots filled the screen and there it was again - I have a slow test!

We are going to hunt down slow tests by reporting tests that take longer than a certain threshold.

The Basics

To get the ball rolling let's create a simple test case with a fast test and a slow test:

import time

import unittest

class SlowTestCase(unittest.TestCase):

def test_should_run_fast(self):

self.assertEqual(1, 1)

def test_should_run_slow(self):

time.sleep(0.5)

self.assertEqual(1, 1)

Running this script from the command line produces the following output:

$ python -m unittest timing.py

..

Ran 2 tests in 0.502s

OK

I'm sorry unittest, but this is definitely not OK - 0.5s for two tests?

To figure out which tests are slow we need to measure the time it takes each test to execute.

A python unittest.TestCase has hooks that execute in the following order:

> setUpClass

> setUp

> test_*

> tearDown

> tearDownClass

If we want to time a single test (test_*) we need to start a timer in setUp and stop it in tearDown:

import time

import unittest

class SlowTestCase(unittest.TestCase):

def setUp(self):

self._started_at = time.time()

def tearDown(self):

elapsed = time.time() - self._started_at

print('{} ({}s)'.format(self.id(), round(elapsed, 2)))

def test_should_run_fast(self):

self.assertEqual(1, 1)

def test_should_run_slow(self):

time.sleep(0.5)

self.assertEqual(1, 1)

This produces the following output:

$ python -m unittest timing.py

__main__.SlowTestCase.test_should_run_fast (0.0s)

.__main__.SlowTestCase.test_should_run_slow (0.5s)

.

Ran 2 tests in 0.503s

OK

Great! We got the timing for each test but we really want only the slow ones.

Let's say a slow test is a test that takes longer than 0.3s:

SLOW_TEST_THRESHOLD = 0.3

class SlowTestCase(unittest.TestCase):

# ...

def tearDown(self):

elapsed = time.time() - self._started_at

if elapsed > SLOW_TEST_THRESHOLD:

print('{} ({}s)'.format(self.id(), round(elapsed, 2)))

And the output:

> python -m unittest timing.py

.__main__.SlowTestCase.test_should_run_slow (0.5s)

.

Ran 2 tests in 0.503s

OK

Awesome! We got exactly what we wanted but it is still incomplete. We are good developers so we are most likely dead lazy. We don't want to go around and update every test case - we need a more robust solution.

The Runner

One of the roles of the TestRunner is to print test results to an output stream. The runner uses a TestResult object to format the results. The unittest module comes with a default TextTestRunner and TextTestResult.

Let's implement a custom TestResult to report slow tests:

import time

from unittest.runner import TextTestResult

SLOW_TEST_THRESHOLD = 0.3

class TimeLoggingTestResult(TextTestResult):

def startTest(self, test):

self._started_at = time.time()

super().startTest(test)

def addSuccess(self, test):

elapsed = time.time() - self._started_at

if elapsed > SLOW_TEST_THRESHOLD:

name = self.getDescription(test)

self.stream.write("\n{} ({:.03}s)\n".format(name, elapsed))

super().addSuccess(test)

Almost identical to what we already have but using different hooks. Instead of setUp we use testStart and instead of tearDown we use addSuccess.

The built-in TextTestRunner uses TextTestResult. To use a different TestResult we create an instance of TextTestRunner with our runner:

from unittest import TextTestRunner

if __name__ == '__main__':

test_runner = TextTestRunner(resultclass=TimeLoggingTestResult)

unittest.main(testRunner=test_runner)

And the output:

$ python runner.py

.

test_should_run_slow (__main__.SlowTestCase) (0.501s)

.

Ran 2 tests in 0.501s

OK

We get a nice report without having to make any changes to existing test cases.

Can We Do Better?

Right now we have a bunch of messages sprinkled around in random places across the screen. What if we could get a nice report with all the slow tests? Well, we can!

Let's start by making our TestResult store the timings without reporting them:

import time

from unittest.runner import TextTestResult

class TimeLoggingTestResult(TextTestResult):

def __init__(self, *args, **kwargs):

super().__init__(*args, **kwargs)

self.test_timings = []

def startTest(self, test):

self._test_started_at = time.time()

super().startTest(test)

def addSuccess(self, test):

elapsed = time.time() - self._test_started_at

name = self.getDescription(test)

self.test_timings.append((name, elapsed))

super().addSuccess(test)

def getTestTimings(self):

return self.test_timings

The test result now holds a list of tuples containing the test name and the elapsed time. Moving over to our custom TestRunner:

# common/test/runner.py

import unittest

class TimeLoggingTestRunner(unittest.TextTestRunner):

def __init__(self, slow_test_threshold=0.3, *args, **kwargs):

self.slow_test_threshold = slow_test_threshold

return super().__init__(resultclass=TimeLoggingTestResult, *args, **kwargs)

def run(self, test):

result = super().run(test)

self.stream.writeln("\nSlow Tests (>{:.03}s):".format(self.slow_test_threshold))

for name, elapsed in result.getTestTimings():

if elapsed > self.slow_test_threshold:

self.stream.writeln("({:.03}s) {}".format(elapsed, name))

return result

Let's break it down:

- We've replaced

SLOW_TEST_THRESHOLDwith a parameter to the init - Much cleaner. - We've set the appropriate TestResult class.

- We've override run and add our custom "slow test" report.

This is what the output looks like (I added some slow tests to illustrate):

> python timing.py

.....

Ran 5 tests in 1.706s

OK

Slow Tests (>0.3s):

(0.501s) test_should_run_slow (__main__.SlowTestCase)

(0.802s) test_should_run_very_slow (__main__.SlowTestCase)

(0.301s) test_should_run_slow_enough (__main__.SlowTestCase)

Now that we have the timing data we can use that to generate interesting reports. We can sort by elapsed time, show potential time reduction and highlight sluggish tests.

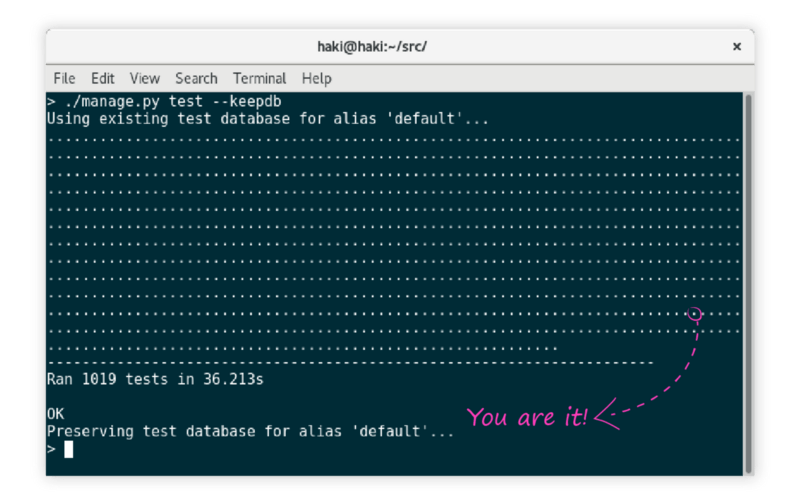

How To Use This With Django

Django has its own test runner so we need to make some adjustments:

# common/test/runner.py

from django.test.runner import DiscoverRunner

# ...

class TimeLoggingTestRunner(DiscoverRunner):

def get_resultclass(self):

return TimeLoggingTestResult

And to make Django use our custom runner we set the following:

# settings.py

TEST_RUNNER = 'common.tests.runner.TimeLoggingTestRunner'

Go make some tests faster!