"Get or create" is a very common operation for syncing data in the database, but implementing it correctly may be trickier than you may expect. If you ever had to implement it in a real system with real-life load, you may have overlooked potential race conditions, concurrency issues and even bloat!

In this article I explore ways to "get ot create" in PostgresSQL.

Table of Contents

Implementing "Get or Create"

Say you have a system that allows users to tag items. You set up the following tags table:

db=# CREATE TABLE tags (

id INT GENERATED BY DEFAULT AS IDENTITY PRIMARY KEY,

name VARCHAR(50) NOT NULL

);

CREATE TABLE

The table contains an auto incrementing primary key and a name. To make sure tags are reused, you add a unique constraint on the name:

db=# ALTER TABLE tags ADD CONSTRAINT tags_name_unique UNIQUE(name);

ALTER TABLE

Adding tags to the table is simple:

db=# INSERT INTO tags (name) VALUES ('A'), ('B') RETURNING *;

id │ name

────┼──────

1 │ A

2 │ B

(2 rows)

INSERT 0 2

If someone tries to add a tag with a name which already exists, they will get an error:

db=# INSERT INTO tags (name) VALUES ('B') RETURNING *;

ERROR: duplicate key value violates unique constraint "tags_name_key"

DETAIL: Key (name)=(B) already exists.

The tag "B" already exists, so the command failed due to unique constraint violation.

When our application tags an item it doesn't care if the tag already exists or not in the database. The application wants the process to be idempotent - providing the same input (tag names) should always produce the same output (the tag objects). A plain INSERT is not idempotent because executing the command with the same input for the second time will trigger an error:

- ❌ The process is not idempotent

To provide idempotency we need to handle two situations:

- If the tag already exists - return the existing tag.

- If the tag does not already exist - create it and return the name and the newly assigned ID.

This type of syncing functionality is often called "get or create".

"UPSERT" vs. "get or create"

"update or insert" (aka "upsert") is different from "get or create". In an "upsert" we update matching row that already exist in the table, while in "get or create" we want to avoid changing existing data. As we'll see in this article, this restriction has significant implications on the implementation.

Providing Idempotency

As we've already seen, if we try to insert a tag which already exists we fail with a unique constraint violation. To make the process idempotent we need to only create tags that don't already exist, and return any tags that do.

Let's adjust our process to check if a tag already exist before we create it:

CREATE OR REPLACE FUNCTION get_or_create_tag(tag_name tags.name%TYPE)

RETURNS SETOF tags AS $$

DECLARE

tag_to_return tags%ROWTYPE;

BEGIN

SELECT * INTO tag_to_return FROM tags WHERE name = tag_name;

IF FOUND THEN

RETURN NEXT tag_to_return;

ELSE

RETURN QUERY INSERT INTO tags (name) VALUES (tag_name) RETURNING *;

END IF;

END;

$$ LANGUAGE plpgsql;

The function first looks for an existing tag with the same name. If it finds a tag it returns it, otherwise it create it and then returns it. The function returns a set of rows and it can be used like this:

db=# TABLE tags;

id │ name

────┼──────

1 │ A

2 │ B

(2 rows)

db=# SELECT * FROM get_or_create_tag('C');

id │ name

────┼──────

3 │ C

(1 row)

db=# SELECT * FROM get_or_create_tag('C');

id │ name

────┼──────

3 │ C

(1 row)

The first time we execute the function with the tag name "C", the tag is created and assigned ID 3. The second time we attempt to get or create "C", the tag already exists and we get the same tag with ID 3. The function is idempotent!

This function can be written in any language really, but thanks to the magic of plpgsql set returning functions we can also use this function to "get or create" multiple tags at once:

db=# SELECT tags.*

FROM

(VALUES ('B'), ('C'), ('D')) AS new(tag),

get_or_create_tag(tag) AS tags;

id │ name

────┼──────

2 │ B

4 │ C

5 │ D

(3 rows)

To create multiple tags at once we produce a set of rows using a VALUES clause, and then join it to our function using the tag name as parameter.

This approach achieves our first goal:

- ✅ The process is idempotent

Executing this function with the same input will always produce the same output. However, there is one subtle issue that can easily go unnoticed.

Handling a Potential Race

The function we came up with looks innocent enough to pass most code reviews, but in-fact, there is a subtle edge case that can cause this function to fail.

If two concurrent processes attempt to use the function to "get or create" the same tag at the same time, we can end up getting a unique constraint violation. Consider the following scenario where two processes, A and B, attempt to insert a new tag "foo":

| Time | Process A | Process B |

|---|---|---|

| T | Check if "foo" exists Tag does not exist |

|

| T + 1 | Check if "foo" exists Tag does not exist |

|

| T + 2 | Insert "foo" ✅ Success |

|

| T + 3 | Insert "foo" 💥Unique constraint violation |

In the race described above, both processes check if the tag exists and finds that it does not exists. Then, both processes proceed to create the tag name. Process A creates the tag first and as a result, process B fails with a unique constraint violation.

Inspired by Django's get_or_create implementation, we can handle the potential unique constraint violation:

CREATE OR REPLACE FUNCTION get_or_create_tag(tag_name tags.name%TYPE)

RETURNS SETOF tags AS $$

DECLARE

tag_to_return tags%ROWTYPE;

BEGIN

SELECT * INTO tag_to_return FROM tags WHERE name = tag_name;

IF FOUND THEN

RETURN NEXT tag_to_return;

ELSE

BEGIN

RETURN QUERY INSERT INTO tags (name) VALUES (tag_name) RETURNING *;

EXCEPTION WHEN unique_violation THEN

RETURN QUERY SELECT * FROM tags WHERE name = tag_name;

END;

END IF;

END;

$$ LANGUAGE plpgsql;

To address the potential race we now catch an exception in the insert command. If a unique constraint violation occurred we assume another process had already inserted the row, so we fetch and return it from the table.

This approach provides us with idempotency and addresses potential races:

- ✅ The process is idempotent

- ✅ The process is safe to execute concurrently

However, under some circumstances this approach may be wasteful.

Using Unique Constraint Violations

Our previous approach is idempotent and safe to execute by multiple processes at the same time, but it comes at a cost. When we add a tag that already exists we only run one query - this is good. However, for new tags we run at least two queries - one to check if it already exists and another to insert it. If most tags are new, this approach is wasteful.

If we expect most tags to be new tags, we can adjust our implementation to remove the initial check:

CREATE OR REPLACE FUNCTION get_or_create_tag(tag_name tags.name%TYPE)

RETURNS SETOF tags AS $$

BEGIN

RETURN QUERY INSERT INTO tags (name) VALUES (tag_name) RETURNING *;

EXCEPTION WHEN unique_violation THEN

RETURN QUERY SELECT * FROM tags WHERE name = tag_name;

END;

$$ LANGUAGE plpgsql;

The function now attempts to insert a new tag straight away. If the insert fail due to unique constraint violation, it looks for the matching tag in the table and returns it:

db=# TABLE tags;

id │ name

────┼──────

1 │ A

2 │ B

3 │ C

(2 rows)

db=# SELECT * FROM get_or_create_tag('D');

id │ name

────┼──────

4 │ D

(1 row)

db=# SELECT * FROM get_or_create_tag('D');

id │ name

────┼──────

4 │ D

(1 row)

This approach achieve both our previous goals:

- ✅ The process is idempotent

- ✅ The process is safe to execute concurrently

One unfortunate nuisance of this approach is that every unique constraint violation is logged. If you are in the habit of skimming the database logs this can be very annoying.

Attempting to insert a tag and handle a potential unique constraint violation is idempotent and safe when executed by concurrent processes, but there is a hidden downside.

Abusing Unique Constraint Violations

To demonstrate the potential issue of relying on unique constraint violations we start by checking the current size of the table:

db=# SELECT pg_size_pretty(pg_relation_size('tags'));

pg_size_pretty

────────────────

8192 bytes

Very small. Next, disable "autovacuum" on the table (more on that later) and insert 50K tags that already exist:

db=# ALTER TABLE tags SET (autovacuum_enabled = false);

SET

db=# \timing on

Timing is on.

db=# SELECT get_or_create_tag('A') FROM generate_series(1, 50000);

get_or_create_tag

───────────────────

(1,A)

(1,A)

...

(1,A)

(1,A)

(50000 rows)

Time: 12077.786 ms (00:12.078)

Attempting to insert 50K tags which already exist in the table took ~12 seconds to complete, pretty slow. Check the size of the table again:

db=# SELECT pg_size_pretty(pg_relation_size('tags'));

pg_size_pretty

────────────────

1776 kB

(1 row)

The table is now more than 200 times bigger! Keep in mind that we only attempted to insert tags that were already there, meaning no new data was actually added to the table. So where did this extra size comes from? Querying the number of dead tuples in the table provides a hint:

db=# SELECT relname, n_dead_tup, n_live_tup

FROM pg_stat_user_tables

WHERE relname = 'tags';

relname │ n_dead_tup │ n_live_tup

─────────┼────────────┼────────────

tags │ 50000 │ 3

(1 row)

The table contains a dead tuple for every attempt to insert a tag that already existed. This article by the Amazon RDS team explains the issue pretty well - in an INSERT command, the row is first added to the table (heap), and only then checked for potential unique constraint violations. If a duplicate is found, an exception is raised and the row is marked dead. When a row is marked dead it occupies space until the table is vacuumed. These dead rows cause "bloat" which makes the table swell beyond its intrinsic size.

To clear the bloat we can manually vacuum the table:

db=# VACUUM tags;

VACUUM

db=# SELECT relname, n_dead_tup, n_live_tup

FROM pg_stat_user_tables

WHERE relname = 'tags';

relname │ n_dead_tup │ n_live_tup

─────────┼────────────┼────────────

tags │ 0 │ 2

(1 row)

db=# SELECT pg_size_pretty(pg_relation_size('tags'));

pg_size_pretty

────────────────

8192 bytes

(1 row)

After vacuuming, the dead rows are cleared and the table goes back to its original size. The database has a background process called "autovacuum" which triggers vacuum automatically when certain thresholds are met. We disabled it for our experiment to demonstrate the issue.

Clearing bloat

I wrote about how to clear bloat in tables and indexes.

This approach now achieves our first goals but suffers from a new issue:

- ✅ The process is idempotent

- ✅ The process is safe to execute concurrently

- ❌ The process generates bloat

Bloat is something we want to avoid, so next, we'll explore ways to "get or create" without generating bloat.

Understanding Sub-Statement Visibility

In processes where duplicates rarely happen, it's perfectly fine to rely on catching unique constraint violations to implement "get or create" functionality. However, in processes where duplicates happen a lot, the bloat can end up consuming a lot of space and put strain on the database.

To avoid the unnecessary bloat in this case we can avoid triggering unique constraint violations in the first place. In our first attempt we did that by checking if the tag exists before we tried to insert it. We did that using a separate query, but there is another way.

Consider the following query to create tags only if they don't already exist:

db=# INSERT INTO tags (name)

SELECT name

FROM (VALUES ('B'), ('C')) AS t(name)

WHERE NOT EXISTS (

SELECT 1

FROM tags

WHERE tags.name = t.name

)

RETURNING *;

id │ name

────┼──────

4 │ C

(1 row)

The tag "B" already exist in the table so we don't try to insert it. As a result, we only get the tag that did not already exist, the tag "C".

To get both existing and new tags, we can execute INSERT in a WITH clause (aka common table expression, CTE) and then query the table for both the new and existing tags:

db=# TABLE tags;

id │ name

────┼──────

1 │ A

2 │ B

(2 rows)

db=# WITH new_tags AS (

INSERT INTO tags (name)

SELECT name

FROM (VALUES ('B'), ('C')) AS t(name)

WHERE NOT EXISTS (

SELECT 1

FROM tags

WHERE tags.name = t.name

)

)

SELECT *

FROM tags

WHERE name IN ('B', 'C');

id │ name

────┼──────

2 │ B

This is strange! In the WITH clause we insert the tag "C", but when we query the tags table immediately after, we only get the tag "B". Does this mean tag "C" was not inserted? Let's check:

db=# TABLE tags;

id │ name

────┼──────

1 │ A

2 │ B

3 │ C

(3 rows)

Selecting from the table after the command completed shows both tags "B" and "C" are present in the table, so why did we only get "B"? The documentation explains:

The sub-statements in

WITHare executed concurrently with each other and with the main query.

Our "main" SELECT can't "see" modifications we made in the WITH clause - this is why we didn't get the new tag "C", only the existing tag "B". The documentation continue to explain the risks of relying on the results of data-modifying statements executed in WITH:

Therefore, when using data-modifying statements in

WITH, the order in which the specified updates actually happen is unpredictable. All the statements are executed with the same snapshot [...], so they cannot “see” one another's effects on the target tables.

This means that any changes to the tags table is not visible to other parts of the query until the query completes. However, this dosen't mean we can't use the results of WITH sub-statements:

RETURNINGdata is the only way to communicate changes between different WITH sub-statements and the main query.

Consider the following example:

db=# WITH new_tags AS (

INSERT INTO tags (name)

SELECT name

FROM (VALUES ('B'), ('C')) AS t(name)

WHERE NOT EXISTS (

SELECT 1

FROM tags

WHERE tags.name = t.name

)

RETURNING *

)

SELECT * FROM new_tags;

id │ name

────┼──────

3 │ C

(1 row)

The main SELECT query references the result of the WITH clause instead of querying tags again, and we see the newly added tag "C". If we adjust the query to get new tags from the result of the WITH, and existing tags from the target table, we should get what we want:

db=# WITH

new_tags AS (

INSERT INTO tags (name)

SELECT name

FROM (VALUES ('B'), ('C')) AS t(name)

WHERE NOT EXISTS (

SELECT 1

FROM tags

WHERE tags.name = t.name

)

RETURNING *

)

SELECT * FROM tags WHERE name IN ('B', 'C')

UNION ALL

SELECT * FROM new_tags;

id │ name

────┼──────

2 │ B

3 │ C

(2 rows)

Let's break it down:

-

Insert the new tags into the table: exclude existing tags to avoid unique constraint violations, create new tags and return the inserted rows. The result from

WITHdepends on the data - it may result in multiple rows, one row or none if all the tags already exist. -

Query existing tags: we don't know in advance which tags already exist, so we query for all matching tags.

-

Query new tags: new tags that were added during the query are not visible yet so we use the results of the

WITHclause.

UNION vs. UNION ALL

Both UNION and UNION ALL concatenate results but UNION also eliminate duplicates which often require a potentially expensive sort or hash operation. Our query already guarantees there can't be duplicates in the concatenated result sets, so UNION ALL is more efficient.

This query now achieves two of our goals:

- ✅ The process is idempotent

- ✅ The process doesn't generate bloat

- ⚠️ Is the process safe to execute concurrently?

Next, we'll check how this approach holds up when executed by multiple concurrency processes.

Avoiding Concurrency Issues

So far we were able to avoid bloat by preventing unique constraint violations, and worked around visibility issues by concatenating results from the target table itself and the results of a WITH clause using RETURNING. To be honest, when I originally tackled this issue I stopped here, but then smarter people pointed out that there might be some concurrency issues with this approach as well. In other words, if many users will attempt to "get or create" at the same time using this approach, they may encounter unexpected errors.

To test concurrent execution, we adjust the command to insert multiple random tags:

-- get_or_create.sql

WITH

-- Generate a random amount of random tags

tags_to_insert AS (

SELECT 'tag' || round(random() * 1000) AS name

FROM generate_series(0, (random() * 10)::int)

),

-- From here on it's roughly the same...

new_tags AS (

INSERT INTO tags (name)

SELECT name

FROM tags_to_insert t

WHERE NOT EXISTS (

SELECT 1

FROM tags

WHERE tags.name = t.name

)

RETURNING *

)

SELECT * FROM new_tags

UNION ALL

SELECT * FROM tags WHERE name IN (

SELECT name

FROM new_tags

);

We generate a random number of random tags to insert - some already exist in the table and some don't. This is supposed to mimic the behavior of real users in a real busy system. Next, use pgbench to execute this concurrently:

$ pgbench --client=4 --time=30 -f get_or_create.sql

pgbench (15.4)

pgbench: error: client 1 script 0 aborted in command 0 query 0: ERROR: duplicate key value violates unique constraint "tags_name_key"

DETAIL: Key (name)=(tag625) already exists.

pgbench: error: client 0 script 0 aborted in command 0 query 0: ERROR: duplicate key value violates unique constraint "tags_name_key"

DETAIL: Key (name)=(tag950) already exists.

pgbench: error: client 3 script 0 aborted in command 0 query 0: ERROR: duplicate key value violates unique constraint "tags_name_key"

DETAIL: Key (name)=(tag522) already exists.

pgbench: error: client 2 script 0 aborted in command 0 query 0: ERROR: duplicate key value violates unique constraint "tags_name_key"

DETAIL: Key (name)=(tag869) already exists.

[...]

pgbench: error: Run was aborted; the above results are incomplete.

It took just a fraction of a second before the script started failing with unique constraint violations! In a real system, this can cause some very strange errors.

We constructed our query to make sure that unique constraint violations can never happen, so how come they did? Apparently, it is possible that in the fraction of a millisecond between the time we checked that the tag does not exist and the time we actually inserted it into the table, another process managed to insert the same value, causing a unique constraint violation. This is similar to what we experienced in one of our first attempts, only this time it happens within a single query!

To recap, this approach achieves two goals but it suffers from one significant issue:

- ✅ The process is idempotent

- ✅ The process doesn't generate bloat

- ❌ The process is not safe to execute concurrently

Next, we'll explore ways to make the process safe when executed by many users at the same time.

Handling Conflicts Gracefully

In procedural programming languages there are two prominent coding styles:

-

"Look before you leap" (LBYL) dictates that you should explicitly test for pre-conditions before executing an operation. This style is popular in languages such as Java and C.

-

"Easier to ask for forgiveness than permission" (EAFP) suggests that it is better to attempt an operation and then handle any possible exception. This approach is encouraged in languages such as Python.

SQL is rarely talked about in terms of traditional programming languages but we can actually classify both of our previous approaches to these coding styles:

-

INSERT,EXCEPT,SELECT: In our first approach we tried to insert a new tag without checking anything in advance, and then handled a unique constraint violation. This is "asking for forgiveness". -

INSERT WHERE NOT EXISTS: In our second approach we tried to avoid unique constraint violations by checking that values don't exist before we attempted to insert. This is "look before you leap".

"Look before you leap" approaches often suffer from an issue called "time-of-check to time-of-use". This issue arises when the underlying data changes between the time we check a certain condition and the time we rely on it. In our case, we check that the tag don't already exists ("time-of-check") and then rely on this check when we attempt to create it ("time-of-use"), but another process changed the underlying data in the meantime (added a tag by the same name), causing our process to fail.

time-of-check time-of-use

Check out Handling Concurrency Without Locks for more about the "time-of-check time-of-use" problem and ways to overcome it.

If that's the case then, what would be the equivalent of "asking for forgiveness" in SQL?

To handle our "TOC-TOU" problem, we can resort to a special clause in the INSERT statement for handling conflicts:

db=# INSERT INTO tags (name) VALUES ('B'), ('C')

ON CONFLICT (name) DO NOTHING;

INSERT 0 1

The ON CONFLICT clause allows us to declare what should happen in case a row we are trying to insert encounters a conflict. In this case, we declared that if we encounter a conflict in a tag's name, we should DO NOTHING. By ignoring conflicting rows our command did't fail with a unique constraint violation. Instead, it completed successfully and inserted 1 row.

Next, let's try to add a RETURNING clause:

db=# INSERT INTO tags (name) VALUES ('B'), ('C')

ON CONFLICT (name) DO NOTHING

RETURNING *;

id │ name

────┼──────

4 │ C

(1 row)

INSERT 0 1

Two important things to notice here:

-

Only modified data is returned: the new tag "C" was returned but the existing tag "B" was not.

-

We have a gap in the sequence: PostgresSQL fetched the next value 3 from the sequence when it attempted to insert the value "B". A tag "B" already exists so there is a conflict. Because we defined

ON CONFLICT DO NOTHING, the statement carried on, discarded the sequence value 3 and did not fail. The next value to insert was "C" and the next value 4 was taken from the sequence. The value "C" does not exist in the table so a new tag with id 4 was created, and we are left with a gap.

Gap-less Sequences in PostgreSQL

PostgreSQL sequences are not guaranteed to be gap-less. This is just an observation, not a strict downside to this approach.

From the last attempt we reckon that PostgreSQL will not return a row that was not modified by the statement. The documentation for INSERT confirms:

Only rows that were successfully inserted or updated will be returned.

We already worked around this issue, so let's adjust the query to execute the INSERT in a WITH clause and then append the existing tags in the main query:

db=# WITH

new_tags AS (

INSERT INTO tags (name)

VALUES ('B'), ('C')

ON CONFLICT (name) DO NOTHING

RETURNING *

)

SELECT * FROM tags WHERE name IN ('B', 'C')

UNION ALL

SELECT * FROM new_tags;

id │ name

────┼──────

2 │ B

4 │ C

(2 rows)

This works as expected and achieves our first goal of providing idempotency - no matter how many times we execute this, we'll always get the same output.

To check if our second goal of preventing unnecessary bloat is achieved we once again disable "autovacuum" and insert 50K tags that already exist:

db=# ALTER TABLE tags SET (autovacuum_enabled = false);

SET

-- Generate an array with 50K values 'A' and store it in a psql variable called "names"

db=# SELECT array_agg('A'::text) AS names FROM generate_series(1, 50000) \gset

db=# \timing on

Timing is on.

db=# WITH

new_tags AS (

INSERT INTO tags (name)

SELECT unnest(:'names'::text[])

ON CONFLICT (name) DO NOTHING

RETURNING *

)

SELECT * FROM tags WHERE name = ANY(:'names'::text[])

UNION ALL

SELECT * FROM new_tags;

id │ name

────┼──────

1 │ A

(1 row)

Time: 96.028 ms

No tags were actually added and the statement completed very quickly. Check the size and the number of dead tuples:

db=# SELECT pg_size_pretty(pg_relation_size('tags'));

pg_size_pretty

────────────────

8192 bytes

(1 row)

db=# SELECT relname, n_dead_tup, n_live_tup

FROM pg_stat_user_tables

WHERE relname = 'tags';

relname │ n_dead_tup │ n_live_tup

─────────┼────────────┼────────────

tags │ 0 │ 2

Size is the same and no dead tuples - our second goal of preventing unnecessary bloat is also achieved.

speculative insertion

The ON CONFLICT clause is using a different infrastructure for handling conflicts called "speculative insertion". Unlike "regular" insertion, using "speculative insertion" a pre-check of unique and exclusion constraints is made before the row is inserted into the table, thus preventing bloat in case of violation. The original commit message offers more details about this infrastructure.

Finally, To check if the process is also safe when executed by many users concurrently, we prepare a script to insert random amount of random tags into the table:

-- get_or_create_on_conflict.sql

WITH

-- Generate a random amount of random tags

tags_to_insert AS (

SELECT 'tag' || round(random() * 1000) AS name

FROM generate_series(0, (random() * 10)::int)

),

-- From here on it's roughly the same...

new_tags AS (

INSERT INTO tags (name)

SELECT name FROM tags_to_insert

ON CONFLICT DO NOTHING

RETURNING *

)

SELECT * FROM new_tags

UNION ALL

SELECT * FROM tags WHERE name IN (SELECT name FROM tags_to_insert);

Execute with pgbench:

$ pgbench --client=4 --time=30 -f get_or_create_on_conflict.sql

pgbench (15.4)

end.

transaction type: get_or_create_on_conflict.sql

scaling factor: 1

query mode: simple

number of clients: 4

number of threads: 1

maximum number of tries: 1

duration: 30 s

number of transactions actually processed: 109499

number of failed transactions: 0 (0.000%)

latency average = 1.095 ms

initial connection time = 24.556 ms

tps = 3652.536348 (without initial connection time)

Great! Using ON CONFLICT we achieve 3 of our goals:

- ✅ The process is idempotent

- ✅ The process doesn't generate bloat

- ✅ The process is safe to execute concurrently

Next we'll look into a few more aspects of "get or create".

Taking a Wrong Turn

In all the solutions we came up with so far we essentially hit the table twice - once to insert new values and ignore conflicts, and another to get existing values. If you are anything like me, this really bugs you.

At some point I thought to myself "Hey! ON CONFLICT only return rows that were affected by the command, so what if instead of ignoring conflicts, I'll perform some meaningless update to "affect" them so they will be returned.". This is what I came up with:

db=# INSERT INTO tags (name) VALUES ('B'), ('C')

ON CONFLICT (name) DO UPDATE SET id = EXCLUDED.id

RETURNING *;

id │ name

────┼──────

3 │ B

4 │ C

(2 rows)

INSERT 0 2

Look at that... so elegant and gets the job done! Now, when we encounter a tag that already exist we update the id to its current value, a meaningless update that will never actually change the data, BUT, will cause every affected row to be updated and returned by RETURNING *.

This approach supposedly touches a row just once, but it has a very significant downside!

In PostgreSQL, when you update a row you essentially delete and insert a new row. The deleted row is marked as dead and consumes space until it is cleaned up by a vacuum process. We once again have a bloat issue!

To demonstrate how this approach can cause significant bloat, get the size of the table and its indexes before we do anything:

db=# SELECT

pg_size_pretty(pg_relation_size('tags')) table_size,

pg_size_pretty(pg_total_relation_size('tags') - pg_relation_size('tags')) indexes_size;

table_size │ indexes_size

────────────┼──────────────

8192 bytes │ 32 kB

(1 row)

Next, "get or create" tags 50K times using our "elegant" approach:

db=# ALTER TABLE tags SET (autovacuum_enabled = false);

ALTER TABLE

db=# DO $$

BEGIN

FOR i in 1..50000 loop

INSERT INTO tags (name) VALUES ('C'), ('D')

ON CONFLICT (name)

DO UPDATE SET name = EXCLUDED.name;

END LOOP;

END $$;

Time: 57331.141 ms (00:57.331)

This took a very long time (~1 minute), but the data barely changed:

db=# SELECT * FROM tags;

id │ name

────┼──────

1 │ A

2 │ B

3 │ C

4 │ D

Next, check the sizes of the table and its indexes after the operation:

db=# SELECT

pg_size_pretty(pg_relation_size('tags')) table_size,

pg_size_pretty(pg_total_relation_size('tags') - pg_relation_size('tags')) indexes_size;

table_size │ indexes_size

────────────┼──────────────

3544 kB │ 64 kB

That's a big difference! The size of the table is now ~50 times bigger and the indexes are twice as big. To clear up that bloat we need to vacuum the table and reindex the indexes:

db=# VACUUM FULL tags;

VACUUM

db=# REINDEX TABLE tags;

REINDEX

db=# SELECT

pg_size_pretty(pg_relation_size('tags')) table_size,

pg_size_pretty(pg_total_relation_size('tags') - pg_relation_size('tags')) indexes_size;

table_size │ indexes_size

────────────┼──────────────

8192 bytes │ 32 kB

(1 row)

This approach takes us back:

- ✅ The process is idempotent

- ❌ The process generates bloat

- ✅ The process is safe to execute concurrently

To recap, don't do it!

Avoiding Schema Changes

INSERT ON CONFLICT is a very powerful command, but it does have one limitation that's worth mentioning. To match rows, INSERT ON CONFLICT requires that the target table will have either a unique constraint or an exclusion constraint on the matched columns.

Imagine we don't have a unique constraint on the tag name:

db=# ALTER TABLE tags DROP CONSTRAINT tags_name_key;

ALTER TABLE

Now, if we try to sync tags using INSERT ON CONFLICT we'll get an error:

db=# INSERT INTO tags (name) VALUES ('B'), ('C') ON CONFLICT (name) DO NOTHING;

ERROR: there is no unique or exclusion constraint matching the ON CONFLICT specification

In some situations we do have a unique constraint on the columns we match by. In other situations we don't, or we are unable to because we want to match by complicated conditions that are hard to express using constraints. Exclusion constraints are useful for enforcing constraint on complicated conditions, but they also come with their own cost, namely having to maintain an index to enforce the constraint. In some environments such as data warehouses, maintaining indexes just to enforce constraints may be undesirable.

Starting with PostgreSQL 15 there is another option to sync data, the MERGE statement. There are some subtle differences between MERGE and INSERT ON CONFLICT which are relevant to this type of tasks:

INSERT ON CONFLICTcan only insert or update,MERGEcan also delete.

Imagine your input was a list of tags and an indication whether they were deleted or not in the source. You want to sync the data such that if a tag was deleted in the source, you either delete it from your target table or avoid creating it in the first place. Using INSERT ON CONFLICT you would have to break this up into multiple WITH clauses, but using MERGE it's possible with just one statement:

db=# TABLE tags;

id │ name

────┼──────

1 │ A

2 │ B

( 2 rows)

db=# MERGE INTO tags USING (VALUES

('A', false),

('B', true),

('C', false),

('D', true)

) AS t(name, deleted)

ON t.name = tags.name

WHEN MATCHED AND deleted THEN DELETE

WHEN MATCHED AND NOT deleted THEN DO NOTHING

WHEN NOT MATCHED AND deleted THEN DO NOTHING

WHEN NOT MATCHED AND NOT deleted THEN INSERT (name) VALUES (t.name);

MERGE 1

db=# TABLE tags;

id │ name

────┼──────

1 │ A

3 │ C

(2 rows)

That is beautiful! We handle four different scenarios in this MERGE command:

| Tag | Source | Target | Action |

|---|---|---|---|

| "A" | not deleted | present | skip (do nothing) |

| "B" | deleted | present | delete |

| "C" | not deleted | not present | insert |

| "D" | deleted | not present | ignore (do nothing) |

This type of syncing logic is very common in ETL processes so MERGE comes in very handy.

INSERT ON CONFLICTrequires a unique constraint on the matched rows,MERGEdoes not.

As we already saw, if we try to use INSERT ON CONFLICT without a constraint on the column we match by we'll get an error:

db=# INSERT INTO tags (name)

VALUES ('B'), ('C')

ON CONFLICT (name) DO NOTHING

ERROR: there is no unique or exclusion constraint

matching the ON CONFLICT specification

MERGE dosen't have this limitation:

db=# MERGE INTO tags USING (VALUES ('B'), ('C')) AS t(name)

ON tags.name = t.name

WHEN NOT MATCHED THEN INSERT (name) VALUES (t.name);

MERGE 0

db=# TABLE tags;

id │ name

────┼──────

1 │ A

2 │ B

4 │ C

(3 rows)

MERGE allows us to provide a condition to match by while INSERT ON CONFLICT only accepts columns or a constraint name. The ability to provide a condition rather than relying on a constraint is very powerful - it allows us to match on complicated conditions that cannot always be (easily) expressed using a unique or exclusion constraint. One example which comes to mind is syncing data into a slowly changing dimension.

Moving back to our tags table. The MERGE statement is not providing anything we can't do with INSERT ON CONFLICT. However, under some real-life circumstances we might want to use it if the syncing logic gets complicated. Unfortunately, the MERGE statement as of PostgreSQL 16 does not include a RETURNING clause so we can't use it in a WITH clause with RETURNING. This is about to change in PostgreSQL 17, when MERGE gains support for the RETURNING clause.

Starting at PostgreSQL 17 we should be able to use MERGE with RETURNING:

db=# WITH

new_tags AS (

MERGE INTO tags

USING (VALUES ('B'), ('C')) AS t(name)

ON tags.name = t.name

WHEN NOT MATCHED THEN INSERT (name) VALUES (t.name)

RETURNING tags.*

)

SELECT * FROM tags WHERE name IN ('B', 'C')

UNION ALL

SELECT * FROM new_tags;

id │ name

────┼──────

4 │ C

2 │ B

(2 rows)

The main advantage of using MERGE instead of INSERT ON CONFLICT is that it doesn't require any constraints on the target table. Here is a recap of this approach:

- ✅ The process is idempotent

- ✅ The process doesn't generate bloat

- ✅ The process is safe to execute concurrently

- ✅ The process doesn't require constraints

Did We Really Solve Idempotency and Concurrency?

This section was added on Aug 23 2024

After I posted this story I received many comments. Most from people who didn't know about the merge command and others sharing how they handle similar situations in their codebases. However, one keen eyed reader Tamir Sen, suggested that despite my best efforts there is still a situation where the solutions above may not provide idempotency and predictable results when executed concurrently.

Considering Transaction Isolation

Consider the following situation where two concurrent sessions attempt to insert a new tag "X":

-- Session 1

db=# BEGIN;

BEGIN

db=*# WITH new_tags AS (

INSERT INTO tags (name) VALUES ('X')

ON CONFLICT (name) DO NOTHING

RETURNING *

)

SELECT * FROM new_tags

UNION ALL

SELECT * FROM tags WHERE name IN ('X');

id │ name

────┼──────

3 │ X

(1 row)

db=*#

-- Session 2

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=# BEGIN;

BEGIN

db=*# WITH new_tags AS (

INSERT INTO tags (name) VALUES ('X')

ON CONFLICT (name) DO NOTHING

RETURNING *

)

SELECT * FROM new_tags

UNION ALL

SELECT * FROM tags WHERE name IN ('X');

-- blocked...

Session 1 inserts tag "X" and gets the new tag in return but does't commit immediately. Session 2 starts a transaction and attempts to insert the same tags "X". At this point session 2 is blocked by session 1.

Let's see what happens when session 1 commits:

-- Session 1

db=*# COMMIT;

COMMIT;

-- Session 2

-- blocked...

id │ name

────┼──────

(0 rows)

db=*#

When session 1 commits the lock is released and session 2 return no rows - this is not good! The tag 'X' was added to the table by session 1, but session 2 did not return it - this is not idempotent. If we provide the tag "X" we expect to get the tag "X" in return regardless of weather it was created or not.

Under READ COMMITED isolation level the database makes sure our session only sees data that was present when we started the session. This is why we don't get tag "X" in session 2 - the session started before session 1 commited the value to the table.

According to the documentation, PostgreSQL does't really provide READ UNCOMMITED isolation level

The SQL standard defines one additional level, READ UNCOMMITTED. In PostgreSQL READ UNCOMMITTED is treated as READ COMMITTED.

That's probably a good thing, but it also means we can't work around this issue by changing the isolation level either.

Inconsistencies Between MERGE and INSERT ... ON CONFLICT

To make things just a little bit worst, let's explore how MERGE behaves in this situation (tested on PostgreSQL 17 Beta 3):

-- Session 1

db=# BEGIN;

BEGIN

db=*# WITH new_tags AS (

MERGE INTO tags

USING (VALUES ('X')) AS t(name) ON t.name = tags.name

WHEN MATCHED THEN DO NOTHING

WHEN NOT MATCHED THEN INSERT (name) VALUES (t.name)

RETURNING tags.*

)

SELECT * FROM new_tags

UNION ALL

SELECT * FROM tags WHERE name IN ('X');

id │ name

────┼──────

3 │ X

(1 row)

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*#

db=*# COMMIT;

COMMIT

-- Session 2

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=# BEGIN;

BEGIN

db=*# WITH new_tags AS (

MERGE INTO tags

USING (VALUES ('X')) AS t(name) ON t.name = tags.name

WHEN MATCHED THEN DO NOTHING

WHEN NOT MATCHED THEN INSERT (name) VALUES (t.name)

RETURNING tags.*

)

SELECT * FROM new_tags

UNION ALL

SELECT * FROM tags WHERE name IN ('X');

-- blocked...

ERROR: duplicate key value violates unique constraint "tags_name_unique"

DETAIL: Key (name)=(X) already exists.

Going through the same process with MERGE produces a different results - instead of returning nothing it fails with a unique constraint violation. This is an issue for two reasons:

-

Using

WHEN MATCHED DO NOTHINGcan cause unique constraint violation: It's not trivial that when explicitly handling the matched case inMERGEwe can cause a unique constraint violation. If I had to write code to sync two tables usingMERGEI would not think about handling a unique constraint violation exception (assuming thatMERGEhandles it). -

Inconsistent behavior between

MERGE WHEN MATCHED DO NOTHINGtoINSERT ON CONFLICT DO NOTHING: while intuitively the two are the same, under some circumstances they can produce different outcomes.

The documentation for MERGE mentions this briefly (emphesis mine):

When MERGE is run concurrently with other commands that modify the target table, the usual transaction isolation rules apply; see Section 13.2 for an explanation on the behavior at each isolation level. You may also wish to consider using

INSERT ... ON CONFLICTas an alternative statement which offers the ability to run anUPDATEif a concurrentINSERToccurs. There are a variety of differences and restrictions between the two statement types and they are not interchangeable.

Given the inconsistent behavior we are left with the obvious question, which result is desired in this case? Do we want non-idempotent results or an exception? If you ask me, I prefer the exception in this case. By silently returning non-idempotent results we risk unexpected and very hard to debug issues. It's better to crash once in this case and handle the unique constraint violation rather than risk ending up with incorrect data.

How MERGE Handles Conflicts Without Constraints

One of the selling points for MERGE was that it dosen't require a constraint on the matching columns. This is unlike INSERT ON CONFLICT that can't work without either a unique or exclusion constraint on the matched columns. Let's explore how MERGE handles conflicts in a concurrent situation when there is no constraint on the matched columns.

First, drop the unique constraint on the name:

db=# ALTER TABLE tags DROP CONSTRAINT tags_name_key;

ALTER TABLE

Now execute two concurrent MERGE statements to insert the same tag at the same time:

-- Session 1

db=# BEGIN;

BEGIN

db=*# MERGE INTO tags

USING (VALUES ('X')) AS t(name) ON t.name = tags.name

WHEN NOT MATCHED THEN INSERT (name) VALUES (t.name)

RETURNING tags.*;

id │ name

────┼──────

3 │ X

(1 row)

db=*#

-- Session 2

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=#

db=# BEGIN;

BEGIN

db=*# MERGE INTO tags

USING (VALUES ('X')) AS t(name) ON t.name = tags.name

WHEN NOT MATCHED THEN INSERT (name) VALUES (t.name)

RETURNING tags.*;

id │ name

────┼──────

4 │ X

(1 row)

db=*#

Previously, when we had a constraint, session 2 was blocked by session 1. However, now there is no constraint and session 2 ends up inserting another tag "X". This is happening because session 2 can't see the tag added by session 1 because it was not commited yet, so no conflict is detected and we end up with a duplicate tags "X".

Coming Full Circle

After going through this journey it seems like we reach the unfortunate conclusion that it may not be possible to guarantee idempotency in a highly concurrent environment using just a single SQL statement. So what can we do?

db=# BEGIN;

BEGIN

db=*# INSERT INTO tags (name) VALUES ('X') ON CONFLICT (name) DO NOTHING

INSERT 1 0

db=*# COMMIT;

COMMIT

db=*# SELECT * FROM tags WHERE name IN ('X');

id │ name

────┼──────

3 │ X

(1 row)

That's not very sexy, but it checks almost all the boxes:

- ✅ The process is idempotent

- ✅ The process doesn't generate bloat

- ✅ The process is safe to execute concurrently

- ❌ The process doesn't require constraints

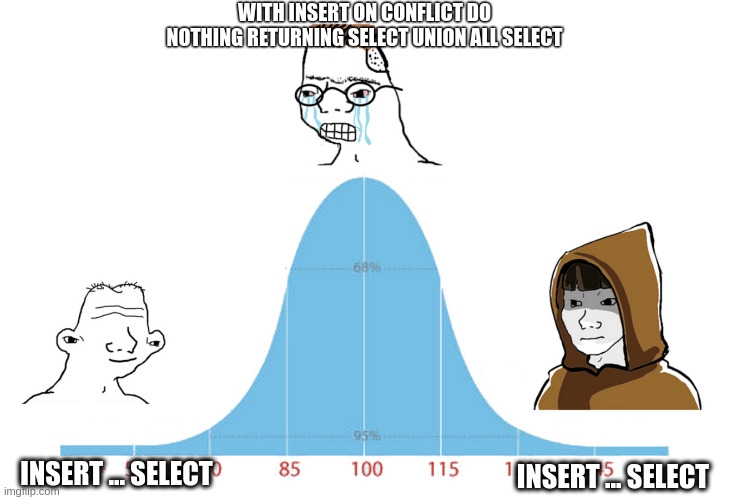

I'm not a big fan of memes, but this one seems appropriate in this case:

Summary

"Get or create" is a very common operation in both OLTP and data warehouse systems. However, as we've seen in this article, implementing it correctly may be trickier than you might think.

Comparison

We covered the following aspects of implementing "get or create" in PostgreSQL:

-

Idempotency: The process should provide the same output regardless of the state of the data. If the tags we wish to insert exist or not in the table, should not affect the outcome of the operation.

-

Concurrency: The process should be safe to execute under heavy load. The fact many connections are executing the process at the same time should not affect the outcome of the operation.

-

Bloat: The process should produce the desired result with as little side effects as possible. Generating bloat has significant affect on overall performance and may come at a significant storage and maintenance cost.

-

Constraints: The process should require as little schema changes as possible. As we've seen, some operations require unique or exclusion constraints which may be undesirable or even impossible.

Here is a table to summarize the approaches we presented:

| Approach | Idempotent | Concurrency | Bloat | Constraint |

|---|---|---|---|---|

INSERT |

❌ | - | ✅ | ❌ |

SELECT INSERT |

✔️ | ❌ | ✅ | - |

SELECT INSERT SELECT |

✔️ | ❌ | ✅ | ❌ |

INSERT EXCEPT SELECT |

✔️ | ✔️ | ❌ | ❌ |

INSERT WHERE NOT EXISTS |

✔️ | ❌ | ✅ | ✅ |

INSERT ON CONFLICT DO NOTHING |

✔️ | ✔️ | ✅ | ❌ |

INSERT ON CONFLICT DO UPDATE |

✅ | ✅ | ❌ | ❌ |

MERGE RETURNING |

✔️ | ✔️ | ✅ | ✅ |

INSERT ON CONFLICT DO NOTHING COMMIT SELECT |

✅ | ✅ | ✅ | ❌ |

✔️ Not safe under the situations described in this section but otherwise OK.

Takeaways

Here are some of the takeaways from our process to find the ideal "get or create" solution in PostgreSQL:

-

Unique constraint violations can cause bloat: new or updated rows are first written to the table and only then checked for duplicates. If there is a violation, the row is marked as dead which causes bloat. To avoid bloat use

INSERT ON CONFLICTinstead. -

WITHclauses are not executed in the order in which they are written: they are executed concurrently with each other. -

WITHclauses do not guarantee complete consistency: changes to the underlying data between differentWITHclauses can cause concurrency issues. -

Changes to the table in a

WITHclause are not visible to other parts of the query: due to visibility rules, all parts of the query see the table as is was when the query began. The only way to communicate changes between parts of a query is using theRETURNINGclause. -

INSERT ON CONFLICTrequires unique or exclusion constraint on the matching columns: to make use of theON CONFLICTcause you must have either a unique or an exclusion constraint on the fields you match by. If this is not the case, useMERGEinstead. -

MERGED WHEN MATCHED DO NOTHINGcan cause unique constraint violation: when two concurrent session attempt to insert the same value at the same time, a match can't be detected and one of the sessions will fail with a unique constraint violation. -

MERGE WHEN MATCHED DO NOTHINGdoes not guaranty no duplicates: when two concurrent session attempt to insert the same value at the same time and there is no unique constraint on the matched columns, a match can't be detected and both session will insert the value, resulting in duplicate values in the table. -

MERGE WHEN MATCHED DO NOTHINGis not equivalent toINSERT ON CONFLICT DO NOTHING: when used without a constraint MERGE may wnd us inserting duplicate values whileINSERT ON CONFLICTrequires a constraint and therefor will not produce duplicate results.