Constraints keep the integrity of your system and prevent you from shooting yourself in the foot. Foreign keys are a special type of constraint because, unlike unique, check, and primary keys, they span more than one relation. This makes foreign keys harder to enforce and harder to get right.

In this article, I demonstrate common pitfalls, potential optimizations, and implicit behavior related to foreign keys.

Table of Contents

- Naive Implementation

- Enhanced Implementation

- Replacing unique_together

- Identifying Duplicate Indexes

- Identifying Blocking Migrations

- Safely Migrating Foreign Key

- Reversible Migration Operations

- Concurrent Index Operations

- Indexes on Foreign Keys

- Partial Foreign Key Indexes

- Using Built-in Concurrent Index Operations

- Order Migration Operations

- Locking Across Relations

- Permissive No Key Locks

- The Final Model

- Takeaways

Watch

📺 This article is inspired by a talk I gave at DjangoCon EU. Watch it here.

Naive Implementation

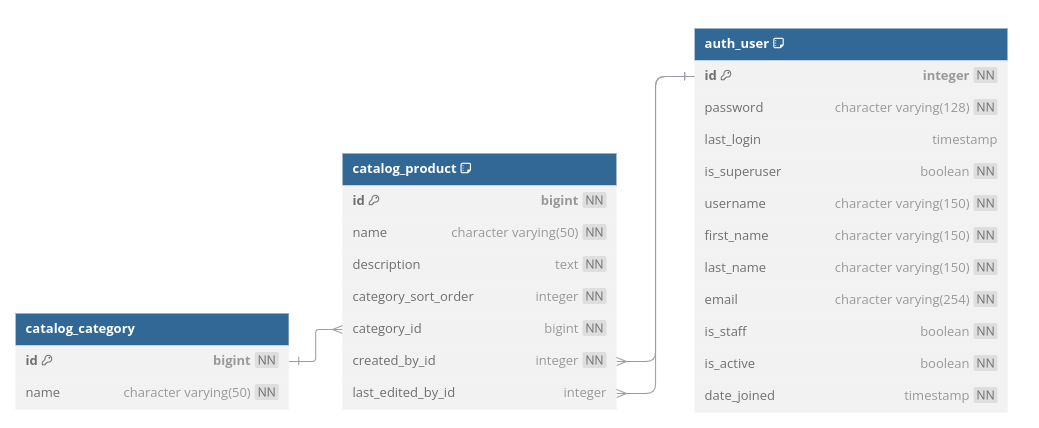

Imagine a simple application to manage a product catalog:

The first table, or model, in the catalog is the Category model:

class Category(models.Model):

id: int = models.BigAutoField(primary_key=True)

name: str = models.CharField(max_length=50)

Categories can be "household items", "fruit", "apparel", and so on.

Next, a model to store products:

class Product(models.Model):

class Meta:

unique_together = (

('category', 'category_sort_order'),

)

id = models.BigAutoField(primary_key=True)

name = models.CharField(max_length=50)

description = models.TextField()

category = models.ForeignKey(to=Category, on_delete=models.PROTECT, related_name='products')

category_sort_order = models.IntegerField()

created_by = models.ForeignKey(to=User, on_delete=models.PROTECT, related_name='+')

last_edited_by = models.ForeignKey(to=User, on_delete=models.PROTECT, related_name='+', null=True)

Products have a name and description, and they are associated with a category by foreign key.

To make sure we present products in the right order in the UI, we add a sort order within the category. To make sure the order is deterministic, we add a unique constraint to prevent products in the same category from having the same sort order.

Finally, we added two columns to keep track of the user who created the product and the user who last edited the product. This is mostly for auditing purposes.

Since we are on the subject of foreign keys, here are the 3 foreign keys we have in this very simple product table:

category: foreign key to the catalog model we created.created_by: foreign key to the user model.last_edited_by: foreign key to the user model. Can be null.

This naive implementation, as the name suggests, is very naive! There is a lot more than meets the eye. In the next sections, we'll make adjustments to improve this model.

Enhanced Implementation

It's been said that developers spend more time reading code than writing code. As someone who has spent a fair amount of time giving code reviews and going through code to understand how it works, I believe this to be true. We started with a naive implementation and now we'll review the code and make it better.

Replacing unique_together

To control the order we present products in the UI we added a category_sort_order to each product. To make sure two products in the same category don't have the same value, we added a unique constraint on the combination of category and category_sort_order:

class Product(models.Model):

class Meta:

unique_together = (

('category', 'category_sort_order'),

)

The Django documentation on unique_together includes a special note we should consider:

Use UniqueConstraint with the constraints option instead. UniqueConstraint provides more functionality than unique_together. unique_together may be deprecated in the future.

Unique together is deprecated and discouraged, so let's replace it with a unique constraint:

@@ -13,9 +13,15 @@ class Category(models.Model):

class Product(models.Model):

class Meta:

- unique_together = (

- ('category', 'category_sort_order', ),

- )

+ constraints = [

+ models.UniqueConstraint(

+ name='product_category_sort_order_uk',

+ fields=(

+ 'category',

+ 'category_sort_order',

+ ),

+ ),

+ ]

Unique constraint is easier to modify, allows to use advanced B-Tree index features (we'll use some later) and is recommended by the documentation, so let's always use that!

💡 Takeaway

Don't use unique_together, use UniqueConstraint instead.

Identifying Duplicate Indexes

Now that we got rid of unique_together, let's have a look at the schema:

catalog=# \d catalog_product

Column │ Type

─────────────────────┼───────────────────────

id │ bigint

name │ character varying(50)

category_sort_order │ integer

category_id │ bigint

created_by_id │ integer

last_edited_by_id │ integer

Indexes:

"catalog_product_pkey" PRIMARY KEY, btree (id)

"catalog_product_category_id_35bf920b" btree (category_id)

"catalog_product_category_id_category_sort_order_b8206596_uniq" UNIQUE CONSTRAINT, btree (category_id, category_sort_order)

"catalog_product_created_by_id_4e458b98" btree (created_by_id)

"catalog_product_last_edited_by_id_05484fb6" btree (last_edited_by_id)

-- ...

It's easy to get lost in all the information here, but notice that we have two indexes that are prefixed by category.

The first index is the unique constraint we just created:

class Product(models.Model):

class Meta:

constraints = [

models.UniqueConstraint(

name='product_category_sort_order_uk',

fields=('category', 'category_sort_order'),

),

]

The second index is not that obvious:

class Product(models.Model):

# ...

category = models.ForeignKey(

to=Category,

on_delete=models.PROTECT,

related_name='products',

)

Where is the index, you ask? The answer lies in the official documentation of the ForeignKey field:

A database index is automatically created on the ForeignKey.

When you define a foreign key, Django implicitly creates an index behind the scenes. In most cases, this is a good idea, but in this case, this field is already (sufficiently) indexed. Reading further in the documentation:

A database index is automatically created on the ForeignKey. You can disable this by setting

db_indextoFalse

If we believe that we don't need the index, we can instruct Django not to create it by setting db_index to False:

@@ -35,6 +35,8 @@ class Product(models.Model):

category = models.ForeignKey(

to=Category,

on_delete=models.PROTECT,

related_name='products',

+ # Indexed in unique constraint.

+ db_index=False,

)

Queries using category can use the unique index instead.

⚠️ Implicit Behavior

ForeignKey field implicitly creates an index unless explicitly setting db_index=False.

To actually remove the index, we first need to generate a migration:

$ ./manage.py makemigrations

Migrations for 'catalog':

demo/catalog/migrations/0003_alter_product_category.py

~ Alter field category on product

But before we move on to apply this migration, there is another small thing we need to take care of.

Identifying Blocking Migrations

Let's have a look at the migration we just generated to remove the index from the foreign key:

class Migration(migrations.Migration):

dependencies = [

('catalog', '0002_alter_product_unique_together_and_more'),

]

operations = [

migrations.AlterField(

model_name='product',

name='category',

field=models.ForeignKey(

db_index=False,

on_delete=django.db.models.deletion.PROTECT,

related_name='products',

to='catalog.category',

),

),

]

The migration looks harmless. Let's dig deeper and review the actual SQL generated by the migration:

$ ./manage.py sqlmigrate catalog 0003

BEGIN;

--

-- Alter field category on product

--

SET CONSTRAINTS "catalog_product_category_id_35bf920b_fk_catalog_category_id" IMMEDIATE;

ALTER TABLE "catalog_product" DROP CONSTRAINT "catalog_product_category_id_35bf920b_fk_catalog_category_id";

ALTER TABLE "catalog_product" ADD CONSTRAINT "catalog_product_category_id_35bf920b_fk_catalog_category_id"

FOREIGN KEY ("category_id") REFERENCES "catalog_category" ("id") DEFERRABLE INITIALLY DEFERRED;

COMMIT;

For the inexperienced eye, this might look OK, but if you really pay attention you'll notice that something very dangerous is going on here.

💡 Takeaway

Always check the SQL generated by migrations.

When we set db_index=False, what we wanted to do is to drop the index but keep the constraint. Unfortunately, Django is unable to detect this nuanced change, so instead, Django is re-creating the entire foreign key constraint without the index!

Re-creating the constraint works in two steps:

1. Dropping the existing constraint:

ALTER TABLE "catalog_product" DROP CONSTRAINT "catalog_product_category_id_35bf920b_fk_catalog_category_id";

The database will drop the index on the field and also stop validating the constraint. Dropping the index requires a lock on the table while the index is dropped. This can block some operations on the table.

2. Creating the constraint:

ALTER TABLE "catalog_product" ADD CONSTRAINT "catalog_product_category_id_35bf920b_fk_catalog_category_id"

FOREIGN KEY ("category_id") REFERENCES "catalog_category" ("id") DEFERRABLE INITIALLY DEFERRED;

The database first obtains a lock on the table. Then, to make sure the constraint is valid, it needs to make sure all the values in the column have a matching field in the referenced table. In this case, it checks that the product's category exists in the category table. Depending on the size of the tables, this can take some time and interfere with ongoing operations against the table.

⚠️ Implicit Behavior

When making changes to ForeignKey Django may implicitly re-create the constraint. This can require an extended locks and block certain operations on the table.

All of this is really not necessary though. We don't have anything against the constraint, what we really want is to keep the constraint as-is and only drop the index.

Safely Migrating Foreign Key

There are some types of changes to ForeignKey fields Django is (currently?) unable to detect. As a result, Django may end up re-creating the constraint in the database. This can lead to extended locking and may impact live systems. To avoid that, we need to change the way Django applies the migration. To understand how we can do that, we need to understand how Django generates migrations in the first place:

Generate migrations using makemigrations:

- Render a state from the existing migrations

- Compare to the desired state of the models in models.py

- Generate migration operations from the difference

Applying migrations using migrate:

- Generate SQL from the migration operations (

sqlmigrate) - Apply to SQL to the database

Let's break down the case of changing a foreign key:

- Django identify that a

ForeignKeyhas changed:db_indexchanged fromTrue->False - Django generates an operation to "sync" the new state: a

migrations.AlterFieldoperation with the new definition of theForeignKeyfield. - Generate SQL from the migration operation: To "sync" the foreign key Django drops the constraint and re-creates it.

Django identified the change to the field, but it's unable to generate a migration operation to just drop the index.

If the migration and the SQL generated by Django is not exactly what we want, there is a special operation called SeparateDatabaseAndState we can use:

A highly specialized operation that lets you mix and match the database (schema-changing) and state (autodetector-powering) aspects of operations.

Using SeparateDatabaseAndState we can provide one set of operations to execute against Django's internal state, and another set of operations to execute against the database:

@@ -11,9 +11,18 @@ class Migration(migrations.Migration):

]

operations = [

- migrations.AlterField(

- model_name='product',

- name='category',

- field=models.ForeignKey(db_index=False, on_delete=django.db.models.deletion.PROTECT, related_name='products', to='catalog.category'),

- ),

+ migrations.operations.SeparateDatabaseAndState(

+ state_operations=[

+ migrations.AlterField(

+ model_name='product',

+ name='category',

+ field=models.ForeignKey(db_index=False, on_delete=django.db.models.deletion.PROTECT, related_name='products', to='catalog.category'),

+ ),

+ ],

+ database_operations=[

+ migrations.RunSQL(

+ 'DROP INDEX catalog_product_category_id_35bf920b',

+ ),

+ ],

+ )

]

The SeparateDatabaseAndState migration operation accepts two arguments:

state_operations: operations to execute against the internal state when aggregating the changes from the migrations. In most cases, this will be the operations Django generated automatically frommakemigrations.database_operations: operations to execute in the database. This is where we control exactly what to execute in the database when the migration is applied usingmigrate. In our case, we useRunSQLto execute aDROP INDEXcommand.

To demonstrate, this is what will be executed when we apply this migration:

$ ./manage.py sqlmigrate catalog 0003

BEGIN;

--

-- Custom state/database change combination

--

DROP INDEX catalog_product_category_id_35bf920b;

COMMIT;

Exactly what we want!

Applying the migration:

$ ./manage.py migrate

Operations to perform:

Apply all migrations: catalog

Running migrations:

Applying catalog.0003_alter_product_category... OK

So are we done...?

Reversible Migration Operations

Say you applied the migration to drop the index and it went OK. A few minutes go by and then you realize you made a horrible mistake. You rush back to your laptop to reverse the migration:

$ ./manage.py migrate catalog 0002

Rendering model states... DONE

Unapplying catalog.0003_alter_product_category...

django.db.migrations.exceptions.IrreversibleError:

Operation <RunSQL ''> in catalog.0003_alter_product_category is not reversible

Oh no! You now have some explaining to do...

The reason you can't un-apply this migration is that the RunSQL command to drop the index did not include an opposite operation. This is an easy fix:

index 962c756..7ad45e0 100644

--- a/demo/catalog/migrations/0003_alter_product_category.py

+++ b/demo/catalog/migrations/0003_alter_product_category.py

@@ -22,6 +22,7 @@ class Migration(migrations.Migration):

database_operations=[

migrations.RunSQL(

'DROP INDEX catalog_product_category_id_35bf920b',

+ 'CREATE INDEX "catalog_product_category_id_35bf920b" ON "catalog_product" ("category_id")',

),

],

)

The second argument to RunSQL is the reverse operation - what to execute to undo the migration. In this case, to reverse dropping an index is to create an index!

But, where do you get this SQL from? Usually from the migration that added it. In this case, the initial migration:

$ ./manage.py sqlmigrate catalog 0001

...

BEGIN;

...

--

-- Create model Product

--

...

CREATE INDEX "catalog_product_category_id_35bf920b" ON "catalog_product" ("category_id");

...

Now if you found out you made a horrible mistake and you want to reverse the migration:

$ ./manage.py migrate catalog 0002

Rendering model states... DONE

Unapplying catalog.0003_alter_product_category... OK

Great!

💡 Takeaway

Provide reverse operations whenever possible - you don't know when you're going to need it.

Concurrent Index Operations

You now made sure to only drop the index without recreating the constraint and you provided a reverse operation in case you made a mistake and want to "undo". That's all great, but just one more thing... from PostgreSQL documentation on DROP INDEX:

A normal DROP INDEX acquires an ACCESS EXCLUSIVE lock on the table, blocking other accesses until the index drop can be completed.

To drop the index, PostgreSQL acquires a lock on the table which blocks other operations. If the index is tiny, that's probably fine, but what if it's a very big index? Dropping a big index can take some time and we can't lock a live table for very long.

PostgreSQL provides an option to create an index without acquiring restrictive locks on the index:

@@ -5,6 +5,7 @@ from django.db import migrations, models

class Migration(migrations.Migration):

+ atomic = False

dependencies = [

('catalog', '0002_alter_product_unique_together_and_more'),

@@ -21,7 +22,7 @@ class Migration(migrations.Migration):

],

database_operations=[

migrations.RunSQL(

- 'DROP INDEX catalog_product_category_id_35bf920b',

+ 'DROP INDEX CONCURRENTLY catalog_product_category_id_35bf920b',

- 'CREATE INDEX "catalog_product_category_id_35bf920b" ON "catalog_product" ("category_id")',

+ 'CREATE INDEX CONCURRENTLY "catalog_product_category_id_35bf920b" ON "catalog_product" ("category_id")',

),

],

Dropping an index concurrently works in two phases. First the index is marked as "deleted" in the dictionary table. During this time, ongoing transactions can still use it. Next, the index is actually dropped. This way of dropping indexes requires minimal locking but can take a bit more time.

💡 Takeaway

Use concurrent index operations in busy systems. Concurrent operations can take a bit more time but they don't block operations to the table while they execute.

Databases such as PostgreSQL that support transactional DDL, can execute CREATE, DROP and ALTER commands inside a database transaction. This is a very useful feature because it allows you to execute schema changes atomically (it also allows you to do some wild things like making indexes "invisible"). Unfortunately, concurrent operations cannot be executed inside a database transaction. This means we need to set the entire migration to be non-atomic by setting atomic=False.

In an atomic migration, if something fails in the middle, the entire transaction is rolled-back and it's like the migration never ran. In a non-atomic migration however, if something fails in the middle, you can end up with an incomplete execution and an inconsistent state. To reduce the risk of getting stuck with a half-applied migration, if you have operations such as drop/create index concurrently in the migration, it's best to split the migration and move these operations to a separate migration.

💡 Takeaway

To avoid incomplete migrations, move operations that can't be executed atomically to a separate migration.

This is the final migration:

class Migration(migrations.Migration):

atomic = False

dependencies = [

('catalog', '0002_alter_product_unique_together_and_more'),

]

operations = [

migrations.operations.SeparateDatabaseAndState(

state_operations=[

migrations.AlterField(

model_name='product',

name='category',

field=models.ForeignKey(db_index=False, on_delete=django.db.models.deletion.PROTECT, related_name='products', to='catalog.category'),

),

],

database_operations=[

migrations.RunSQL(

'DROP INDEX CONCURRENTLY catalog_product_category_id_35bf920b',

'CREATE INDEX CONCURRENTLY "catalog_product_category_id_35bf920b" ON "catalog_product" ("category_id")',

),

],

)

]

In this migration we prevented Django from re-creating the entire foreign key and instead only drop the index. This migration also uses concurrent index operations so it's safe to execute on a live system, and if you make a mistake it is also reversible.

Indexes on Foreign Keys

So far we handled the foreign key on category, so let's move on to the next foreign key in the model:

class Product(models.Model):

#...

created_by = models.ForeignKey(

to=User,

on_delete=models.PROTECT,

related_name='+',

)

Django will implicitly add an index on a ForeignKey field unless explicitly stated otherwise. Since we didn't define db_index=False on this field, Django created an index on created_by. But, do we really need it?

To answer this question, we need first to ask how this field is being used:

- Used primarily for audit purposes

- Products are rarely queried by the user who created them

From these two use-cases, it seems like no one is actually going to query the table by created_by, so this index is most likely unnecessary. However, there is another use for this index, which is not as obvious.

To demonstrate, let's create a user:

>>> haki = User.objects.create_user(

... username='haki',

... first_name='haki',

... last_name='benita',

... )

<User: haki>

Now turn on SQL logging on and delete the user we just created a second ago:

>>> haki.delete()

(0.438) SELECT * FROM "catalog_product" WHERE "catalog_product"."created_by_id" IN (102); args=(102,)

(0.002) SELECT * FROM "catalog_product" WHERE "catalog_product"."last_edited_by_id" IN (102); args=(102,)

(0.000) BEGIN;

(0.002) DELETE FROM "django_admin_log" WHERE "django_admin_log"."user_id" IN (102); args=(102,)

(0.001) DELETE FROM "auth_user_groups" WHERE "auth_user_groups"."user_id" IN (102); args=(102,)

(0.001) DELETE FROM "auth_user_user_permissions" WHERE "auth_user_user_permissions"."user_id" IN (102); args=(102,)

(0.001) DELETE FROM "auth_user" WHERE "auth_user"."id" IN (102); args=(102,)

(0.368) COMMIT;

(1, {'auth.User': 1})

A lot of things are happening here! Let's break it down:

- Django checks if there are products that were created by or last edited by this user.

- Django deletes any admin logs, group memberships and permissions associated with this user.

- Django actually deletes the user from the user tables.

- Django commits the transaction and then the database does all of these checks too!

This brings us to the next, less obvious way, indexes on foreign keys are being used - to validate the foreign key constraint. If the foreign key is defined with on_delete=PROTECT, the index is used to make sure there are no related objects referencing a specific object. In our case, products that reference the user we are about to delete. If the foreign key is defined with on_delete=CASCADE, the index is used to delete the related objects. In our case, deleting the user may also delete products referencing the user.

You may have noticed that Django delete() function returns a counter-like structure that keeps how many objects were deleted for each type of model. This is why despite the fact the database also does all of these checks, Django is also doing them. The indexes on the foreign key are working extra-hard here.

💡 Takeaway

Indexes on foreign keys are used indirectly when deleting related objects. Removing these indexes may cause unexpected and hard to debug performance issues with deletes.

Now that we know this index is in-fact necessary, we explicitly set db_index on the field and add an appropriate comment so the next developer understands why we decided to keep it:

@@ -44,7 +44,8 @@

class Product(models.Model):

created_by = models.ForeignKey(

to=User,

on_delete=models.PROTECT,

related_name='+',

+ # Used to speed up user deletion.

+ db_index=True,

)

When someone else (or you in a couple of months) encounters this comment, they won't have to go through the entire process again.

💡 Takeaway

Always explicitly set db_index on ForeignKey and add a comment on how it's being used.

Partial Foreign Key Indexes

So far we covered two of three foreign keys - category and created_by. Here is the last one:

class Product(models.Model):

[...]

last_edited_by = models.ForeignKey(

to=User,

on_delete=models.PROTECT,

related_name='+',

null=True,

)

Just like the index on created_by, this index is used mostly for audit purposes. Nobody wants to query for products last edited by some user. However, we've been down this road before and we know this index is used when users are deleted, so we'll keep it. But, before we call it a day, there is something we can still do with this index.

To demonstrate we first need to add some data.

Create 100 users:

from django.contrib.auth.models import User

users = [

User.objects.create_user(

username=f'user{i}',

email=f'user{i}@email.com',

first_name=f'User {i}',

) for i in range(100)

]

Create 50 categories:

from catalog.models import Category

categories = [

Category.objects.create(

name=f'Category {i}',

) for i in range(50)

]

Create 1,000,000 products:

import random

from django.utils import lorem_ipsum

from catalog.models import Product

random.seed(8080)

Product.objects.bulk_create((

Product(

name=f'Product {i}',

description=lorem_ipsum.words(100),

category=random.choice(categories),

category_sort_order=i,

created_by=random.choice(users),

last_edited_by=random.choice(users) if i % 1000 == 0 else None,

) for i in range(1_000_000)),

batch_size=100_000,

)

Notice that only one in every 1,000 products has been edited.

Now that we have some data, let's have a look at the indexes:

catalog=# \di+ *product*

Schema │ Name │ Size

────────┼──────────────────────────────────────────────┼─────────

public │ catalog_product_created_by_id_4e458b98 │ 6440 kB

public │ catalog_product_last_edited_by_id_05484fb6 │ 6320 kB

public │ catalog_product_pkey │ 21 MB

public │ product_category_sort_order_uk │ 32 MB

There is one strange thing going on. If you don't spot this right away that's fine, most people don't.

Consider this query to check how many users we have in created_by and last_edited_by:

catalog=# SELECT

COUNT(created_by_id) AS created_by,

COUNT(last_edited_by_id) AS last_edited_by

FROM catalog_product;

created_by │ last_edited_by

────────────┼────────────────

1000000 │ 1000

Out of 1M rows, all products have a value in created_by, but only 1,000 rows have a value for last_edited_by - that's ~99.9% empty values! If that's the case, how come indexes on both these fields are the same size:

catalog=# \di+ *product*

Schema │ Name │ Size

────────┼──────────────────────────────────────────────┼─────────

public │ catalog_product_created_by_id_4e458b98 │ 6440 kB

public │ catalog_product_last_edited_by_id_05484fb6 │ 6320 kB

public │ catalog_product_pkey │ 21 MB

public │ product_category_sort_order_uk │ 32 MB

Both indexes are ~6MB, but one has 1M values and the other only 1K values. The reason both indexes are the same size is that in PostgreSQL, null values are indexed!

💡 Takeaway

Null values are indexed (In all major databases except Oracle).

I started my career as an Oracle DBA, where null values are not indexed. It took me some time (and a lot of expensive storage) until I realized that in PostgreSQL null values are indexed.

We have a foreign key column which is mostly used to validate the constraint and it is 99.9% empty. What if we could only index the rows which are not null? From PostgreSQL documentation on "partial index":

A partial index is an index built over a subset of a table; the subset is defined by a conditional expression [...]. The index contains entries only for those table rows that satisfy the predicate.

Exactly what we need! Let's replace the index on the ForeignKey with a partial B-Tree index:

@@ -22,6 +22,13 @@ class Product(models.Model):

class Meta:

+ indexes = (

+ models.Index(

+ name='product_last_edited_by_part_ix',

+ fields=('last_edited_by',),

+ condition=models.Q(last_edited_by__isnull=False),

+ ),

+ )

@@ -53,5 +60,7 @@ class Product(models.Model):

last_edited_by: User | None = models.ForeignKey(

on_delete=models.PROTECT,

related_name='+',

null=True,

+ # Indexed in Meta.

+ db_index=False,

)

We start by setting db_index=False to instruct Django we don't want the default index. We also make sure to add a comment saying that the field is indexed in Meta.

Next, we add a new index in Meta. What makes this index partial is the condition we added in the index definition:

models.Index(

name='product_last_edited_by_part_ix',

fields=('last_edited_by',),

condition=models.Q(last_edited_by__isnull=False),

)

This will make the index include only values which are not null. If we generate and apply the migration, these are the sizes of the indexes:

catalog=# \di+ *product*

Schema │ Name │ Size

────────┼────────────────────────────────────────┼─────────

public │ catalog_product_created_by_id_4e458b98 │ 6440 kB

public │ product_last_edited_by_part_ix │ 32 kB

public │ catalog_product_pkey │ 21 MB

public │ product_category_sort_order_uk │ 32 MB

The full index is ~6.4MB, the partial index only 32 kB. That's ~99.5% smaller.

💡 Takeaway

Use partial indexes when you don't need to index all the values in a column. Nullable foreign key columns are usually great candidates. If you are not convinced check out the story about The Unexpected Find That Freed 20GB of Unused Index Space.

Using Built-in Concurrent Index Operations

In the previous section we managed to save some $$$ by switching to a partial index. But with all the excitement we forgot something very very important - always check the generated SQL before applying migrations!

This is the migration:

class Migration(migrations.Migration):

dependencies = [

('catalog', '0005_alter_product_created_by'),

migrations.swappable_dependency(settings.AUTH_USER_MODEL),

]

operations = [

migrations.AlterField(

model_name='product',

name='last_edited_by',

field=models.ForeignKey(

db_index=False,

null=True,

on_delete=django.db.models.deletion.PROTECT,

related_name='+',

to=settings.AUTH_USER_MODEL

),

),

migrations.AddIndex(

model_name='product',

index=models.Index(

condition=models.Q(('last_edited_by__isnull', False)),

fields=['last_edited_by'],

name='product_last_edited_by_part_ix',

),

),

]

Looks about right. The SQL:

$ ./manage.py sqlmigrate catalog 0006

BEGIN;

--

-- Alter field last_edited_by on product

--

SET CONSTRAINTS "catalog_product_last_edited_by_id_05484fb6_fk_auth_user_id" IMMEDIATE;

ALTER TABLE "catalog_product" DROP CONSTRAINT "catalog_product_last_edited_by_id_05484fb6_fk_auth_user_id";

ALTER TABLE "catalog_product" ADD CONSTRAINT "catalog_product_last_edited_by_id_05484fb6_fk_auth_user_id"

FOREIGN KEY ("last_edited_by_id") REFERENCES "auth_user" ("id") DEFERRABLE INITIALLY DEFERRED;

--

-- Create index product_last_edited_by_part_ix on field(s) last_edited_by of model product

--

DROP INDEX IF EXISTS "product_last_edited_by_part_ix";

CREATE INDEX "product_last_edited_by_part_ix" ON "catalog_product" ("last_edited_by_id")

WHERE "last_edited_by_id" IS NOT NULL;

COMMIT;

Oh no! It's recreating the foreign key from scratch again. We already know what to do - use SeparateDatabaseAndState to only drop the index:

@@ -13,10 +13,20 @@ class Migration(migrations.Migration):

operations = [

- migrations.AlterField(

- model_name='product',

- name='last_edited_by',

- field=models.ForeignKey(db_index=False, null=True, on_delete=django.db.models.deletion.PROTECT, # ...

+ migrations.operations.SeparateDatabaseAndState(

+ state_operations=[

+ migrations.AlterField(

+ model_name='product',

+ name='last_edited_by',

+ field=models.ForeignKey(db_index=False, null=True, on_delete=django.db.models.deletion.PROTECT, # ...

+ ),

+ ],

+ database_operations=[

+ migrations.RunSQL(

+ 'DROP INDEX CONCURRENTLY catalog_product_last_edited_by_id_05484fb6;',

+ 'CREATE INDEX CONCURRENTLY catalog_product_last_edited_by_id_05484fb6 ON public.catalog_product USING btree (last_edited_by_id)',

+ ),

+ ],

The migration will now drop the index instead of re-creating the constraint.

Next, we want to create the partial index concurrently to not interrupt any live system. So far we used RunSQL for concurrent operations, but this time, we can use one of the built-in Django concurrent operations instead:

@@ -3,9 +3,11 @@

+from django.contrib.postgres.operations import AddIndexConcurrently

class Migration(migrations.Migration):

+ atomic = False

dependencies = [

('catalog', '0005_alter_product_created_by'),

@@ -28,7 +30,7 @@ class Migration(migrations.Migration):

),

],

),

- migrations.AddIndex(

+ AddIndexConcurrently(

model_name='product',

index=models.Index(

condition=models.Q(('last_edited_by__isnull', False)),

fields=['last_edited_by'],

name='product_last_edited_by_part_ix',

),

),

Django offers two special drop-in concurrent migration index operations for PostgreSQL:

AddIndex->AddIndexConcurrentlyRemoveIndex->RemoveIndexConcurrently

These operations are only available for PostgreSQL. For other databases you still need to use RunSQL.

The reason we couldn't use these operations for the implicit index on ForeignKey is that these operations can only be used for indexes defined in Meta.indexes, and not for implicitly created indexes or indexes created outside Django's context.

Order Migration Operations

So far we prevented the migration from re-creating the foreign key and made sure all index operations are concurrent. This is the current migration:

class Migration(migrations.Migration):

atomic = False

dependencies = [

('catalog', '0005_alter_product_created_by'),

migrations.swappable_dependency(settings.AUTH_USER_MODEL),

]

operations = [

migrations.operations.SeparateDatabaseAndState(

state_operations=[

migrations.AlterField(model_name='product', name='last_edited_by', field=models.ForeignKey(db_index=False, null=True, on_delete=django.db.models.deletion. #...

],

database_operations=[

migrations.RunSQL(

'DROP INDEX catalog_product_last_edited_by_id_05484fb6;',

'CREATE INDEX catalog_product_last_edited_by_id_05484fb6 ON public.catalog_product USING btree (last_edited_by_id)',

),

],

),

AddIndexConcurrently(

model_name='product',

index=models.Index(condition=models.Q(('last_edited_by__isnull', False)), fields=['last_edited_by'], # ...

),

]

If you pay close attention, this migration can still impact a live system. Consider the current order of migration operations:

- Drop full index

- Create partial index

Between the first and second steps, the system is left with no index. Keep in mind that we used concurrent operations, so we had to make the migration non-atomic. This means that all changes take effect immediately, and not at the end of the migration. This order of operations can have two consequences:

-

From the time the full index is dropped until the partial index is created, the system doesn't have an index. This can cause queries using the index to become slower.

-

If the migration fails between the first and second steps, the system will be left without an index until the migration is attempted again.

This solution to this headache is to simply switch the order of operations:

@@ -15,6 +15,10 @@ class Migration(migrations.Migration):

operations = [

+ AddIndexConcurrently(

+ model_name='product',

+ index=models.Index(condition=models.Q(('last_edited_by__isnull', False)), fields=['last_edited_by'], #...

+ ),

migrations.operations.SeparateDatabaseAndState(

state_operations=[

migrations.AlterField(

@@ -30,8 +34,4 @@ class Migration(migrations.Migration):

),

],

),

- AddIndexConcurrently(

- model_name='product',

- index=models.Index(condition=models.Q(('last_edited_by__isnull', False)), fields=['last_edited_by'], #...

- ),

]

First create the partial index and then drop the full index. This way, the system is never left without an index, and if the migration fails at any point, we still have at least one index.

💡 Takeaway

Adjust the order of migration operations to reduce the impact on a running application during the migration. It is usually better to create first, and drop after.

This wraps up the migrations part, on to actual business logic!

Locking Across Relations

Say we want to add a the ability to edit a product with the following requirements:

- Let a user update the name and description of a product

- Keep track of the user who last edited the product

- Only superusers can edit products created by other superusers

A naive implementation can look like this:

class Product(models.Model):

# ...

def edit(self, *, name: str, description: str, edited_by: User) -> None:

if self.created_by.is_superuser and not edited_by.is_superuser:

# Only superusers can edit products created by other superusers.

raise errors.NotAllowed()

self.name = name

self.description = description

self.last_edited_by = edited_by

self.save()

The edit function is implemented as an instance method on the Product model. It accepts the name and description to update, and the editing user. It performs the necessary permission check and moves on to set the required fields and save.

In some cases this is fine, and you can stop here. However, blindly operating on an instance like this is not always ideal:

- The copy you have in-memory may be stale

- Not safe against concurrent updates

If you are not convinced that concurrency is a real concern, check out How to Get or Create in PostgreSQL and Handling Concurrency Without Locks.

A solution more resilient to concurrent edits using a pessimistic approach can look like this:

from django.db import transaction

@classmethod

def edit(cls, id: int, *, name: str, description: str, edited_by: User) -> Self:

with transaction.atomic():

product = (

cls.objects

.select_for_update()

.get(id=id)

)

if product.created_by.is_superuser and not edited_by.is_superuser:

# Only superusers can edit products created by other superusers.

raise errors.NotAllowed()

product.name = name

product.description = description

product.last_edited_by = edited_by

product.save()

return product

These are the main differences:

-

Using a class method instead of an instance method: with an instance method we are operating on an already fetched object. To obtain a lock on the row we need to control how it's fetched from the database, so switching to

classmethod. -

Lock the row: to acquire a lock we must operate inside a database transaction. Then, to actually lock the row, we use

select_for_updatewhich resolves to aSELECT ... FOR UPDATESQL clause.

All this locking business can be pretty distracting. So distracting in-fact, that you can end up missing the most obvious optimization in Django, the optimization that appears in every "top 10 Django optimizations" list. I'm taking of course, about the all mighty select_related!

To implement the permission check we access the user that created the product:

if product.created_by.is_superuser and not edited_by.is_superuser:

# Only superusers can edit products created by other superusers.

raise errors.NotAllowed()

The user is not fetched in advance, so when we access it, Django will go the database and fetch it. Since we know in advance that we are going to access the user, we can tell Django to fetch it along with the product using select_related:

product = (

cls.objects

.select_for_update()

.select_related('created_by')

.get(id=id)

)

This will reduce the number of queries by one. However, there is a little gotcha here that can easily go unnoticed.

Consider the following scenario with two session operating at the same time:

>>> # Session 1

>>> with transaction.atomic():

... product = (

... Product.objects

... .select_for_update()

... .select_related('created_by')

... .get(id=1)

... )

...

...

... # transaction is still ongoing...

>>> # Session 2

>>>

>>>

>>>

>>>

>>>

>>> u = User.objects.get(id=97)

>>> u.is_active = False

>>> u.save()

# Blocked!

In this scenario, session 1 is in the process of editing a product. At the same time, session 2 is attempting to update the user and gets blocked. Why did session 2, which is not updating a product, gets blocked?

The reason session 2 got blocked is that SELECT ... FOR UPDATE locks rows from all the tables referenced by the query! In this case, when we added select_related we added a join to the query, to fetch both the product and the user who created it. As a result, the rows of both the product and the user who created it are locked! If someone happens to try to update a user while we update a product they created, they can get blocked.

⚠️ Implicit Behavior

By default, select_for_update locks the rows from all the referenced tables.

To avoid locking all the rows you can explicitly state which tables to lock using select_for_update:

@@ -80,6 +80,7 @@ class Product(models.Model):

product: Self = (

cls.objects

.select_related('created_by')

+ .select_for_update(of=('self', ))

.get(id=id)

)

self is a special keyword that evaluates to the queryset's model, in this case, product.

💡 Takeaway

Always explicitly state which tables to lock when using select_for_update. Even if you don't have select_related right now, you might have in the future.

Permissive No Key Locks

Previously we used select_for_update(of=('self', )) to avoid locking the user when we only want to lock and update the product. Now let's look at another scenario. This time, we want to insert a new product:

>>> Product.objects.create(

... name='my product',

... description='a lovely product',

... category_id=1,

... category_sort_order=999997,

... created_by_id=1,

... last_edited_by_id=None,

... )

<Product 1000001>

This works.

Now let's do it again, but this time, while another session is trying to update user 1:

>>> # Session 1

>>> with transaction.atomic():

... user = (

... User.objects

... .select_for_update(of=('self',))

... .get(id=1)

... )

... # transaction is still ongoing...

...

...

...

...

...

...

...

...

>>> # Session 2

>>>

>>>

>>>

>>>

>>>

>>>

>>> Product.objects.create(

... name='my product',

... description='a lovely product',

... category_id=1,

... category_sort_order=999998,

... created_by_id=1,

... last_edited_by_id=None,

... )

... # Blocked!

Session 1 locks user 1 for update. At the same time, Session 2 attempts to insert a new product which is created by the same user and it gets blocked! Why did it get blocked?

Imagine you are the database. You have one session locking the user and another trying to reference it. How can you be sure the first session is not going to change the primary key for this user? How can you be sure it's not going to delete this user? If Session 1 is going to do any of these things, Session 2 should fail. This is why the database has to lock Session 2 until Session 1 completes.

The two scenarios the database is concerned about are valid, but very rare in most cases. How often do you actually update the primary key of an object or a unique constraint that may be referenced by a foreign key? probably never. In PostgreSQL, there is a way to communicate that to the database, and obtain a more permissive lock when selecting for update, using FOR NO KEY UPDATE:

>>> # Session 1

>>> with transaction.atomic():

... user = (

... User.objects

... .select_for_update(no_key=True, of=('self',))

... .get(id=1)

... )

... # transaction is still ongoing...

...

...

...

...

...

...

...

>>> # Session 2

>>>

>>>

>>>

>>>

>>>

>>>

>>> Product.objects.create(

... name='my product',

... description='a lovely product',

... category_id=1,

... category_sort_order=999998,

... created_by_id=1,

... last_edited_by_id=None,

... )

... <Product 1000002>

By setting no_key=True, we tell the database we are not going to update the primary key of the user. The database can then acquire a more permissive lock, and the second session can now safely create the product.

💡 Takeaway

Use select_for_update(no_key=True) to select a row for update when not updating primary keys or unique constraints that are referenced by foreign keys. This will require a more permissive lock and prevent unnecessary locks when operating on referencing objects.

Going back to our Product model, to edit a product we used select_for_update to prevent concurrent updates from updating the product at the same time. By locking the row, we also accidentally prevent other referencing models from creating objects while we have the lock. In the case of model like Product, this can have a huge impact on the system.

Imagine you run an e-commerce website using this catalog. When a user makes a purchase you create an Order instance that references the product. Now imagine that every time someone updates a product, your system can't create orders. This is unacceptable!

To allow the system to create orders while a product is being updated, add no_key=True:

--- a/demo/catalog/models.py

+++ b/demo/catalog/models.py

@@ -80,7 +80,7 @@ class Product(models.Model):

product: Self = (

cls.objects

.select_related('created_by')

- .select_for_update(of=('self', ))

+ .select_for_update(of=('self', ), no_key=True)

.get(id=id)

)

Now we can safely edit a product without interfering with a live system.

The Final Model

It's been quite a ride from the naive model we started with, but here is the final model:

class Product(models.Model):

class Meta:

constraints = [

models.UniqueConstraint(

name='product_category_sort_order_uk',

fields=(

'category',

'category_sort_order',

),

),

]

indexes = (

models.Index(

name='product_last_edited_by_part_ix',

fields=('last_edited_by',),

condition=models.Q(last_edited_by__isnull=False),

),

)

id = models.BigAutoField(

primary_key=True,

)

name = models.CharField(

max_length=50,

)

description = models.TextField()

category = models.ForeignKey(

to=Category,

on_delete=models.PROTECT,

related_name='products',

# Indexed in unique constraint.

db_index=False,

)

category_sort_order = models.IntegerField()

created_by = models.ForeignKey(

to=User,

on_delete=models.PROTECT,

related_name='+',

# Used to speed up user deletion.

db_index=True,

)

last_edited_by = models.ForeignKey(

to=User,

on_delete=models.PROTECT,

related_name='+',

null=True,

# Indexed in Meta.

db_index=False,

)

@classmethod

def edit(

cls,

id: int,

*,

name: str,

description: str,

edited_by: User,

) -> Self:

with db_transaction.atomic():

product: Self = (

cls.objects

.select_related('created_by')

.select_for_update(of=('self', ), no_key=True)

.get(id=id)

)

if product.created_by.is_superuser and not edited_by.is_superuser:

# Only superusers can edit products created by other superusers.

raise errors.NotAllowed()

product.name = name

product.description = description

product.last_edited_by = edited_by

product.save()

return product

This model and the accompanying migrations are safe, resilient, and most importantly, production ready!

Takeaways

Here is a recap of the takeaways from this article:

- Don't use

unique_together, useUniqueConstraintinstead - Always explicitly set

db_indexand add a comment - Always check the SQL generated by migrations before applying them

- Provide reverse migration operations whenever possible

- Use concurrent index operations in busy systems

- Indexes on foreign keys are used indirectly by deletes

- Use partial indexes when possible

- Adjust the order of migration operations to reduce impact on live systems

- Explicitly state tables to lock when using

select_for_update - Use

no_key=Truewhen selecting a row for update to allow referencing objects to be created

The Mandatory AI Angle

Since it's 2025 you can't really have a serious article about technology that doesn't mention AI. So here is a funny story about AI and this exact article.

When I first presented this article in a talk at DjangoCon EU, I showed the final slide with all the takeaways and made a joke along the lines of "show me the LLM that can do that". Most people seemed to appreciate this little joke.

After the talk, a friend came to me and said "come man, let me show you something". He pulled his laptop and opened Cursor. He took my takeaways from the slide and put them in a rules file. He then had Cursor refactor one of his models.py files with my takeaways as guidelines... I can only say I had mixed feelings about the result.